by Amruta Vidwans

Learning a musical instrument is difficult. It requires regular practice, expert advice, and supervision. Even today, musical training is largely driven by interaction between a student and a human teacher, plus individual practice sessions at home.

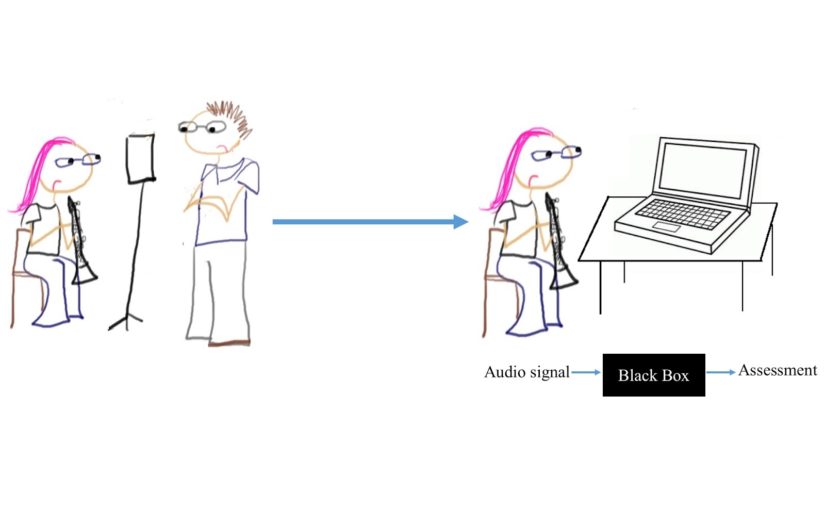

Can technology improve this process and the learning experience? Can an algorithm assess a student's music performance? If so, we are one step closer to a truly musically intelligent tutoring system that helps students learn their instrument of choice by providing feedback on aspects such as rhythmic correctness and note accuracy. Automatic assessment is not only useful to students in their practice sessions but could also help band directors in the auditioning and (pre-)selection process. While there are a few commercial products for practicing instruments, the assessment in these products is usually either trivial or opaque to the user.

The realization of a musically intelligent system for music performance assessment requires knowledge from multiple disciplines such as digital signal processing, machine learning, audio content analysis, musicology, and music psychology. With recent advances in Music Information Retrieval (MIR), noticeable progress has been made in related research topics.

Despite these efforts, identifying a reliable and effective method for assessing music performances remains an unsolved problem. In our study, we explore the effectiveness of various objective descriptors by comparing three sets of features extracted from the audio recording of a music performance: (i) a baseline set with common low-level features (often used but hardly meaningful for this task), (ii) a score-independent set with designed performance features (custom-designed descriptors such as pitch deviation, computed without knowledge of the musical score), and (iii) a score-based set with designed performance features (taking advantage of the known musical score). The goal is to identify a set of meaningful objective descriptors for the general assessment of student music performances. The data we used covers alto saxophone recordings from three years of student auditions (Florida state auditions), rated by experts in the assessment categories of musicality, note accuracy, rhythmic accuracy, and tone quality.
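To make the idea of a score-independent descriptor more concrete, here is a minimal sketch of one possible pitch-deviation feature. It is an illustration only, not the feature definition used in the study: the function names, the 440 Hz tuning reference, and the assumption that a pitch tracker has already produced a frame-wise fundamental frequency track (with 0 marking unvoiced frames) are all hypothetical.

```python
import math
from statistics import mean, pstdev

A4_HZ = 440.0  # assumed tuning reference; not specified in the study


def cents_from_nearest_semitone(f0_hz):
    """Signed deviation, in cents, from the nearest equal-tempered pitch."""
    semitones_from_a4 = 12.0 * math.log2(f0_hz / A4_HZ)
    return 100.0 * (semitones_from_a4 - round(semitones_from_a4))


def pitch_deviation_features(f0_track_hz):
    """Summarize a pitch track with two illustrative descriptors.

    Frames with f0 <= 0 are treated as unvoiced and skipped.
    No musical score is needed: each frame is compared with the
    nearest equal-tempered pitch, whatever note was intended.
    """
    deviations = [cents_from_nearest_semitone(f) for f in f0_track_hz if f > 0]
    if not deviations:
        return {"mean_abs_cents": 0.0, "std_cents": 0.0}
    return {
        "mean_abs_cents": mean([abs(d) for d in deviations]),
        "std_cents": pstdev(deviations),
    }


# Hypothetical pitch track: an in-tune A4, a slightly sharp A4, one unvoiced frame.
features = pitch_deviation_features([440.0, 443.0, 0.0])
```

A score-based variant would instead compare each frame with the pitch the score prescribes at that point in time, which requires aligning the recording to the score first.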

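| Label: Musicality | E1 | E2 | E3 | E4 |
| --- | --- | --- | --- | --- |
| Correlation (r) | 0.19 | 0.49 | 0.56 | 0.58 |

Table 1: Correlation (r) between the predicted and the expert assessments for the musicality label, per feature set.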

Our observations (as seen in Table 1) are that, as expected, the baseline features (E1) are not able to capture any qualitative aspects of the music performance, so the regression model mostly fails to predict the expert assessments. Another expected result is that score-based features (E3) generally represent the data better than score-independent features (E2) in all categories. The combination of score-independent and score-based features (E4) shows some tendency to improve results, but the gain remains small, hinting at redundancies between the feature sets. With values between 0.5 and 0.65 for the correlation between the prediction and the human assessments, there is still a long way to go before computers will be able to reliably assess student music performances, but the results show that automatic assessment is possible to a certain degree.
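For readers unfamiliar with the metric, the correlation between predicted and expert ratings can be computed as a Pearson correlation coefficient. The sketch below is a plain-Python illustration under stated assumptions: the function name and the example ratings are made up, and the exact evaluation protocol of the study (for example, how the data was split for training and testing) is not described here.

```python
import math


def pearson_r(predicted, expert):
    """Pearson correlation between model predictions and expert ratings."""
    if len(predicted) != len(expert) or len(predicted) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(predicted)
    mean_p = sum(predicted) / n
    mean_e = sum(expert) / n
    # Sums of centered products; the 1/n factors cancel in the ratio below.
    cov = sum((p - mean_p) * (e - mean_e) for p, e in zip(predicted, expert))
    var_p = sum((p - mean_p) ** 2 for p in predicted)
    var_e = sum((e - mean_e) ** 2 for e in expert)
    if var_p == 0 or var_e == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / math.sqrt(var_p * var_e)


# Hypothetical ratings on a 0..1 scale, for illustration only.
expert_ratings = [0.2, 0.5, 0.6, 0.8, 0.9]
model_predictions = [0.4, 0.4, 0.7, 0.6, 0.8]
r = pearson_r(model_predictions, expert_ratings)
```

A value of 1 means the predictions follow the expert ratings perfectly in a linear sense, while a value near 0 means the model output tells us almost nothing about how the experts rated the performance.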

To learn more, please see the published paper.

Header image used with kind permission of Rachel Maness from http://wrongguytoask.blogspot.com/2012/08/woodwinds.html